The US Internal Revenue Service (IRS) has partnered with a Virginia-based private identity-verification firm that requires, among other things, a facial recognition selfie in order to create or access online accounts with the agency.

According to KrebsOnSecurity, the IRS announced that by the summer of 2022, the only way to log into irs.gov will be through ID.me. Founded by former Army Rangers in 2010, the McLean-based company has evolved into providing online ID verification services, which several states are using to help reduce unemployment and pandemic-assistance fraud. The company claims to have 64 million users.

Some 27 states already use ID.me to screen for identity thieves applying for benefits in someone else’s name, and now the IRS is joining them. The service requires applicants to supply a great deal more information than typically requested for online verification schemes, such as scans of their driver’s license or other government-issued ID, copies of utility or insurance bills, and details about their mobile phone service.

When an applicant doesn’t have one or more of the above — or if something about their application triggers potential fraud flags — ID.me may require a recorded, live video chat with the person applying for benefits. -KrebsOnSecurity

For his article, Krebs made himself a guinea pig, signing up with ID.me to document a lengthy process that “may require a significant investment of time, and quite a bit of patience.”

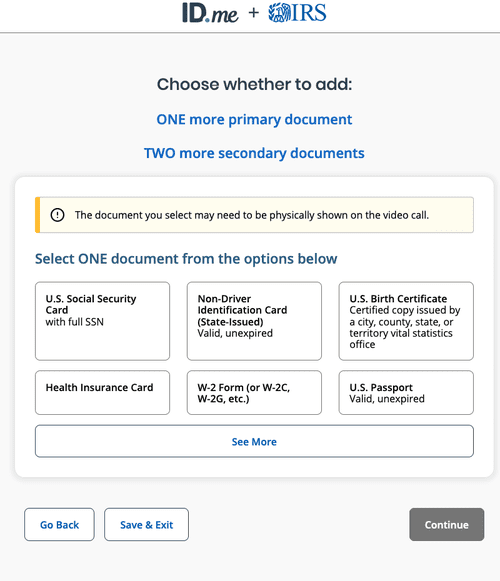

The process begins with uploading images of one’s driver’s license, state-issued ID, or passport.

If your documents get accepted, ID.me will then prompt you to take a live selfie with your mobile device or webcam. That took several attempts. When my computer’s camera produced an acceptable result, ID.me said it was comparing the output to the images on my driver’s license scans. -KrebsOnSecurity

Once that’s accepted, ID.me will ask you to verify your phone number, and it will not accept numbers tied to voice-over-IP services such as Skype or Google Voice.

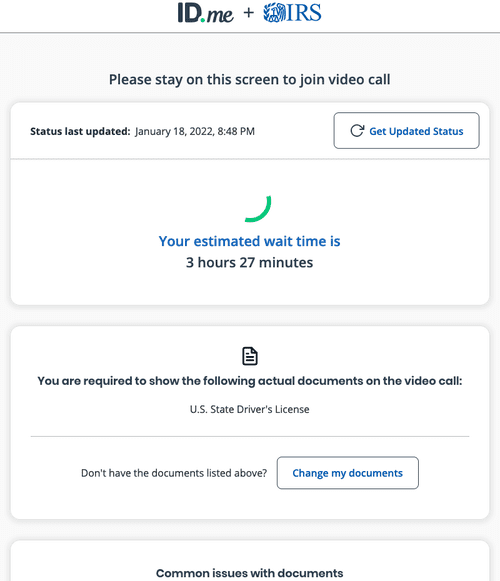

Krebs’s application stalled at the “Confirming your Phone” stage, which led to a video chat (and resubmitting other information) with an estimated wait time of 3 hours and 27 minutes. Krebs, who had interviewed ID.me’s founder the previous year, emailed him and was connected with a customer service rep one minute later, “against my repeated protests that I wanted to wait my turn like everyone else.”

As far as security goes, CEO Blake Hall told Krebs last year that the company is “certified against the NIST 800-63-3 digital identity guidelines” and “employs multiple layers of security, and fully segregates static consumer data tied to a validated identity from a token used to represent that identity.”

“We take a defense-in-depth approach, with partitioned networks, and use [a] very sophisticated encryption scheme so that when and if there is a breach, this stuff is firewalled,” said Hall. “You’d have to compromise the tokens at scale and not just the database. We encrypt all that stuff down to the file level with keys that rotate and expire every 24 hours. And once we’ve verified you we don’t need that data about you on an ongoing basis.”

Krebs sees identity verification that relies on tools such as facial recognition as a “Plant Your Flag” moment: “Love it or hate it, ID.me is likely to become one of those places where Americans need to plant their flag and mark their territory, if for no other reason than it will probably be needed at some point to manage your relationship with the federal government and/or your state.”

US government agencies are using facial recognition tech with almost no oversight

Ken Macon via Reclaim the Net, July 7, 2021

There are a few appropriate uses for facial recognition technology in the hands of law enforcement. These instances should be exceedingly rare, like tracking down a confirmed terrorist or locating an armed and dangerous suspect in the middle of a crowd. Beyond extreme emergency scenarios, facial recognition technology should not be used.

Unfortunately, it’s being used by a ton of people at nearly two dozen government agencies for a multitude of reasons with absolutely zero oversight, minimal tracking of use, and effectively no rules detailing when and how it is to be applied. It’s clear we have a huge problem when mundane agencies like the IRS, FDA, and USPS employ facial recognition technology. When was the last time a Food and Drug Administration agent went hunting for terrorists?

Considering the IRS seems poised to become part of the police state, this is especially troubling.

The Government Accountability Office’s (GAO) report on facial recognition usage is both startling and infuriating. It shows a combination of incompetence and abuse that makes for a very dangerous recipe, especially in the hands of government. There is basically no oversight. The agencies using it can’t keep their stories straight about how they use it or even which companies they employ to service the equipment. It’s all completely opaque, and nobody in Congress seems to care.

The Government Accountability Office (GAO), a federal oversight agency, has released a report on the use of facial recognition by government agencies. The main revelation in the report is that there is little internal oversight of how the technology is used.

There’s no denying that facial recognition technology is useful for criminal investigations. However, the technology is not perfect: it is prone to false positives, which can send law enforcement officers after the wrong person. It’s also generally invasive and promotes a surveillance state.

Private companies providing the tech, such as Clearview, have only made the reputation of facial recognition worse.

It came as no surprise to learn that intelligence and law enforcement agencies such as the FBI, DEA, ATF, TSA, and the Department of Homeland Security own or use facial recognition tech. According to the report, 20 agencies use the technology, including some that you wouldn’t expect, such as the IRS, FDA, and US Postal Service.

Perhaps more concerning is the agencies’ use of Clearview, a private company that’s been proven to be dishonest and has been investigated and sued in the recent past. Half of the agencies have contracts or have used Clearview’s technology, making the controversial company the most popular third-party facial recognition provider.

The report states that these agencies use “state, local, and non-government systems to support criminal investigations.” However, many of these agencies are not aware of which non-government systems their employees use.

“Thirteen federal agencies do not have awareness of what non-federal systems with facial recognition technology are used by employees. These agencies have therefore not fully assessed the potential risks of using these systems, such as risks related to privacy and accuracy. Most federal agencies that reported using non-federal systems did not own systems. Thus, employees were relying on systems owned by other entities, including non-federal entities, to support their operations.”

Some agencies did not even know how often their employees use this technology and had to poll them to find out. Data that depends on honest self-reporting is hardly reliable.

One of the agencies initially told GAO that it does not use non-federal systems. However, “after conducting a poll, the agency learned that its employees had used a non-federal system to conduct more than 1,000 facial recognition searches.”

Careless use of an inaccurate technology could also put these agencies in violation of the law and could expose details of ongoing investigations to the public.

“When agencies use facial recognition technology without first assessing the privacy implications and applicability of privacy requirements, there is a risk that they will not adhere to privacy-related laws, regulations, and policies. There is also a risk that non-federal system owners will share sensitive information (e.g. photo of a suspect) about an ongoing investigation with the public or others.”